AMASS: Archive of Motion Capture As Surface Shapes

Naureen Mahmood, Nima Ghorbani, Nikolaus F. Troje, Gerard Pons-Moll, Michael J. Black

ICCV 2019

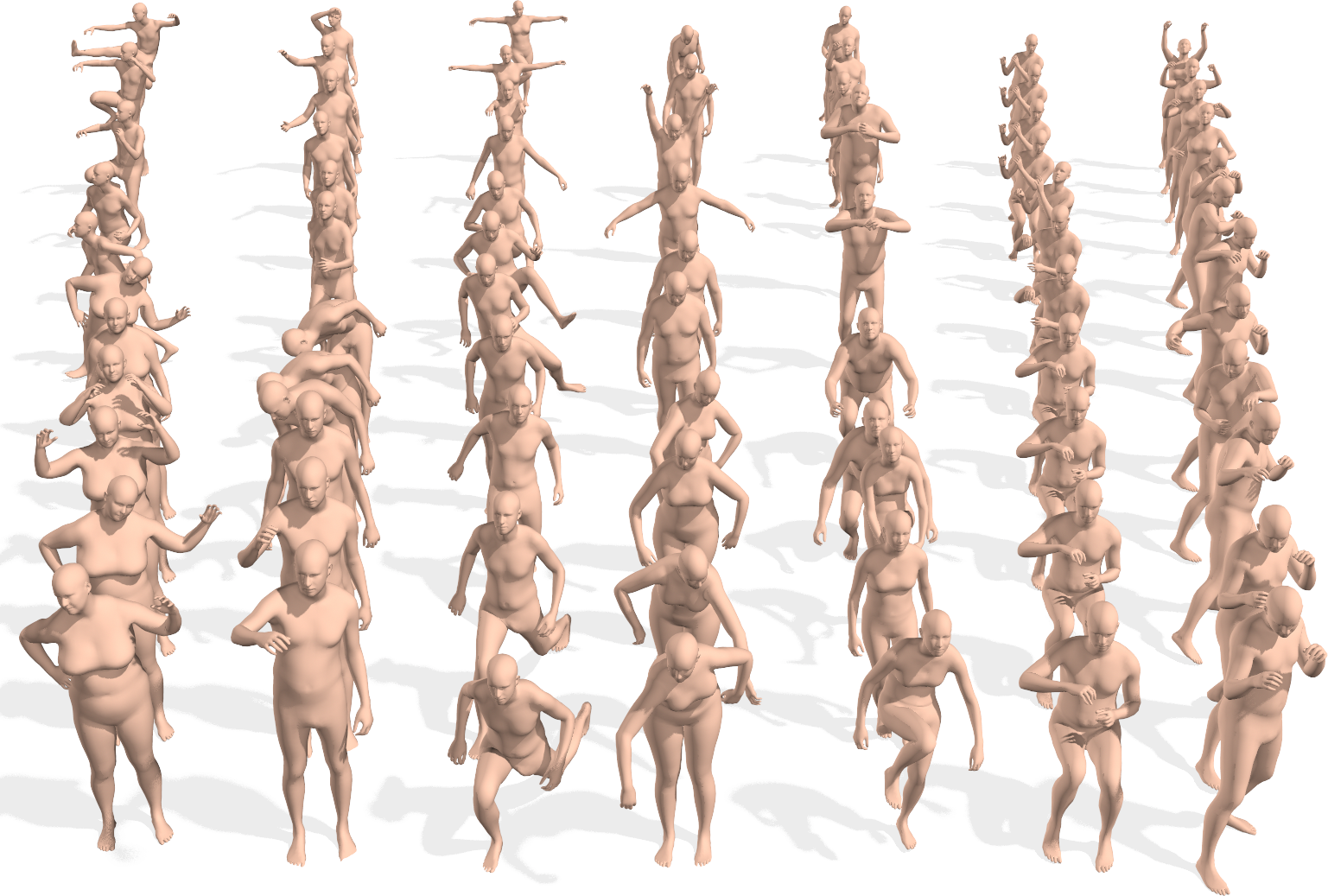

AMASS is a large database of human motion unifying different optical marker-based motion capture datasets by representing them within a common framework and parameterization. AMASS is readily useful for animation, visualization, and generating training data for deep learning.

Abstract

Large datasets are the cornerstone of recent advances in computer vision using deep learning. In contrast, existing human motion capture (mocap) datasets are small and the motions limited, hampering progress on learning models of human motion. While there are many different datasets available, they each use a different parameterization of the body, making it difficult to integrate them into a single meta dataset. To address this, we introduce AMASS, a large and varied database of human motion that unifies 15 different optical marker-based mocap datasets by representing them within a common framework and parameterization. We achieve this using a new method, MoSh++, that converts mocap data into realistic 3D human meshes represented by a rigged body model. Here we use SMPL [26], which is widely used and provides a standard skeletal representation as well as a fully rigged surface mesh. The method works for arbitrary marker sets, while recovering soft-tissue dynamics and realistic hand motion. We evaluate MoSh++ and tune its hyperparameters using a new dataset of 4D body scans that are jointly recorded with marker-based mocap. The consistent representation of AMASS makes it readily useful for animation, visualization, and generating training data for deep learning. Our dataset is significantly richer than previous human motion collections, with more than 40 hours of motion data spanning over 300 subjects and more than 11,000 motions, and it is available for research at https://amass.is.tue.mpg.de/.
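SMPL, the body model AMASS uses, describes body pose as one axis-angle vector per joint: the vector's direction is the rotation axis and its length is the rotation angle in radians. As a hedged illustration of that parameterization (it is not part of MoSh++ itself), the stdlib-only sketch below converts one such vector into a 3×3 rotation matrix with Rodrigues' formula. The function name `axis_angle_to_matrix` is hypothetical.

```python
import math


def axis_angle_to_matrix(v):
    """Convert a 3-D axis-angle vector to a 3x3 rotation matrix (Rodrigues' formula).

    Hypothetical helper: the direction of v is the rotation axis and its
    Euclidean length is the rotation angle in radians.
    """
    x, y, z = v
    theta = math.sqrt(x * x + y * y + z * z)
    if theta < 1e-12:
        # (Near-)zero rotation: return the identity matrix.
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = x / theta, y / theta, z / theta  # unit rotation axis
    c, s = math.cos(theta), math.sin(theta)
    t = 1.0 - c
    # R = cos(theta) I + sin(theta) [k]_x + (1 - cos(theta)) k k^T,
    # written out element by element.
    return [
        [c + kx * kx * t, kx * ky * t - kz * s, kx * kz * t + ky * s],
        [ky * kx * t + kz * s, c + ky * ky * t, ky * kz * t - kx * s],
        [kz * kx * t - ky * s, kz * ky * t + kx * s, c + kz * kz * t],
    ]
```

For a frame of SMPL pose parameters (three values per joint), the same function would be applied to each consecutive triple to obtain one rotation matrix per joint.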


Download

We provide the body model parameters corresponding to each motion capture sequence in the included datasets, along with tutorial code to visualize the data and basic tools to use it in deep learning tasks.
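As an illustration of working with these per-sequence parameters, the sketch below assumes the layout of the SMPL+H releases of AMASS as used in the public tutorials: each sequence is an `.npz` file with keys such as `poses` (frames × 156 axis-angle values), `trans`, `betas`, `gender`, and `mocap_framerate`. These key names, and the split of the 156 values into root (3), body (63), and hand (90) rotations, are assumptions to verify against the files you download; other releases (for example SMPL-X) use different keys. The helper names `split_poses` and `duration_seconds` are hypothetical.

```python
# Assumed SMPL+H layout: 52 joints x 3 axis-angle values = 156 per frame.
ROOT = slice(0, 3)      # global root orientation (1 joint)
BODY = slice(3, 66)     # 21 body joints
HANDS = slice(66, 156)  # 30 hand joints (15 per hand)


def split_poses(poses):
    """Split per-frame pose vectors into root, body, and hand parts.

    Accepts a list of per-frame lists or a 2-D NumPy array, since
    iterating over either yields one frame (row) at a time.
    """
    root = [frame[ROOT] for frame in poses]
    body = [frame[BODY] for frame in poses]
    hands = [frame[HANDS] for frame in poses]
    return root, body, hands


def duration_seconds(num_frames, framerate):
    """Length of a sequence in seconds, given its capture frame rate."""
    if framerate <= 0:
        raise ValueError("framerate must be positive")
    return num_frames / framerate
```

With NumPy installed, `data = numpy.load(path)` on a downloaded sequence returns a mapping; under the assumptions above, `data["poses"]` could be passed to `split_poses`, and `len(data["poses"])` together with `float(data["mocap_framerate"])` to `duration_seconds`.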

Before downloading, you have to register on the AMASS website (https://amass.is.tue.mpg.de/).

Referencing the AMASS Dataset

@conference{AMASS:ICCV:2019,
  title = {{AMASS}: Archive of Motion Capture as Surface Shapes},
  author = {Mahmood, Naureen and Ghorbani, Nima and Troje, Nikolaus F. and Pons-Moll, Gerard and Black, Michael J.},
  booktitle = {International Conference on Computer Vision},
  pages = {5442--5451},
  month = oct,
  year = {2019},
  month_numeric = {10}
}

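To cite all the datasets in AMASS, please download the accompanying bib file (amass.bib). With BibTeX, you can then cite them all as follows:

\bibliography{amass}

...

\cite{….}

If you use biblatex instead, load the file with \addbibresource{amass.bib} in the preamble rather than \bibliography{amass}.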

Contribute to AMASS

The research community needs more human motion data. If you have interesting motion capture data and are willing to share it for research purposes, we will MoSh it for you and add it to the AMASS dataset. To contribute, please contact amass@tue.mpg.de.

Contact

Hosted and created by Perceiving Systems, part of the Max Planck Institute for Intelligent Systems.